December 15th 2022

Hi everyone, long time no see. I want to tell you about my journey through this development process. I have been working hard to implement the planned features of this project.

Developing systematic trading software is akin to an endless game of Tetris, where new features constantly enter from the top of the screen and need to be slotted into place: infrastructure, automation, machine learning, execution strategies, and many more.

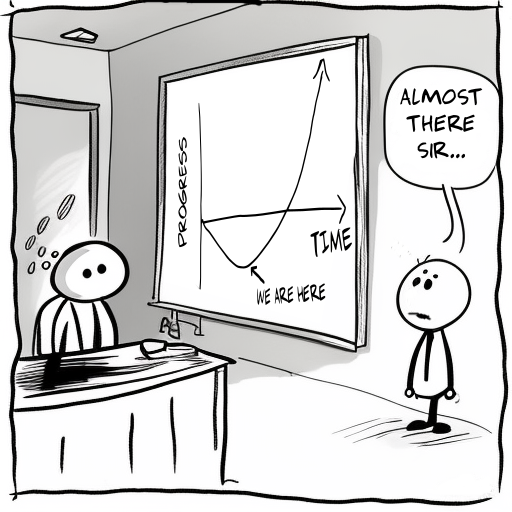

When I first started, I did not anticipate the sheer amount of work that would be involved in getting the project to this point. Designing and implementing everything from scratch has been a challenge, and trading results were unpredictable and subject to wild swings. Researching and reading up on such a wide range of unrelated areas soon became a mammoth task. The software and infrastructure requirements were difficult enough, but manageable. However, trying to understand the financial markets, an area that was completely new to me, was a stretch. After months of going down the research rabbit hole and uncovering layer after layer of complexity, I came to the realisation that getting this done within a short timeframe was going to be a real challenge. The more I learned, the more I realised how much more there was to learn. I had unwittingly Dunning-Kruger'ed myself.

Ah, crap.

But I kept persisting, slowly working on one item at a time. Some days it would be on execution, some weeks on machine learning, some months on ripping out sections and rewriting them. Slowly, I started to climb my way out of the bottom of the J curve with some small successes along the way, proving that the initial design philosophies were at least sound. Splitting each component into its own module and having individual microservices made it easier to modify functionality without breaking things; logically, it made each component a building block. Luckily, I decided to limit my time spent on the traditional 'technical analysis' path that many day traders and plenty of academic papers seemed to focus on, instead favouring a statistical approach. The early machine learning experiments gave me a deeper understanding of the markets. While they proved that markets are mostly made up of financial noise, some predictability did emerge - on very short timescales at least, from milliseconds to a handful of minutes - but this was not long enough to get in and out of a trade with a profit. The trading fees paid to the broker or exchange killed the viability of most trades. However, at a minimum this did prove that my machine learning pipeline actually worked: what the system had learned to predict during training was validated while live trading. This was a bit of a breakthrough moment in the process, which made me realise that I didn't have to create an institutional-grade feature list; I could focus on what was simple instead.
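To make the point about fees concrete, here is a minimal sketch of the arithmetic. The function name and all of the numbers are made up for illustration; they are not figures from my own trading. It only shows why a correctly predicted short-term move can still lose money once both sides of the trade are paid for.

```python
def net_return_bps(gross_edge_bps: float, fee_bps_per_side: float,
                   spread_bps: float = 0.0) -> float:
    """Net return of one round trip (entry plus exit), in basis points.

    All inputs are hypothetical: the predicted move, the fee charged on each
    side of the trade, and an optional allowance for crossing the spread.
    """
    round_trip_cost = 2 * fee_bps_per_side + spread_bps
    return gross_edge_bps - round_trip_cost


# Illustrative numbers only: a correctly predicted 4 bps move,
# traded with a 5 bps (0.05%) fee on both entry and exit.
print(net_return_bps(gross_edge_bps=4.0, fee_bps_per_side=5.0))  # -6.0
```

In this made-up case the prediction is right and the trade still loses 6 bps, so the predicted move has to be larger than the round-trip cost before a signal is worth trading.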

Scaling up this process to find more signals was going to require more sophistication and better infrastructure. There are many input parameters into an ML-based trading system: basic financial data (such as OHLCV and order book depth), alternative data (such as ranking tickers by market cap, or how their rank position has changed over the last n days), and infrastructure-related data such as response times from individual exchanges (some crypto exchanges start to experience high load in the lead-up to highly volatile periods). The number of parameters involved leads to a feature explosion problem, which in machine learning terms means that many tens of thousands of simulations would have to be run to work out which combinations are stable. Brute-forcing all of the parameters can be done via a technique called grid search, but this is not feasible in a reasonable timeframe. Handily, there are optimisation libraries out there such as Optuna which can reduce the number of simulations needed to a certain extent. Even so, running all of these simulations on Google Cloud was very expensive, and a lot of time had to be dedicated to automating the infrastructure as much as possible. Most of the servers were set up automatically where possible, using tools such as Terraform, Ansible, and various Docker scripts, but it was becoming a big time sink to monitor and debug these components on top of the application code.
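As a rough illustration of why grid search blows up, the sketch below counts the combinations in a small search space and compares that with a fixed trial budget. Everything in it is an assumption made for the example: the parameter names, their ranges, and the toy scoring function are invented, and plain random sampling stands in for a proper optimiser. Optuna's samplers go further by using the results of earlier trials to choose the next candidates.

```python
import math
import random

# Hypothetical search space: names and ranges are illustrative only.
search_space = {
    "lookback_bars": list(range(10, 210, 10)),          # 20 values
    "n_features": list(range(5, 55, 5)),                # 10 values
    "learning_rate": [0.001, 0.003, 0.01, 0.03, 0.1],   # 5 values
    "max_depth": list(range(2, 12)),                    # 10 values
    "rank_window_days": [1, 3, 5, 10, 20, 30],          # 6 values
}

# Grid search has to run one simulation per combination.
grid_size = math.prod(len(values) for values in search_space.values())
print(grid_size)  # 60000


def toy_backtest_score(params: dict) -> float:
    """Stand-in for an expensive simulation; peaks at an arbitrary point."""
    return -(
        ((params["lookback_bars"] - 120) / 200) ** 2
        + ((params["max_depth"] - 6) / 10) ** 2
    )


def random_search(space: dict, objective, n_trials: int, seed: int = 0):
    """Evaluate a fixed budget of randomly sampled combinations."""
    rng = random.Random(seed)
    best_params, best_score = None, -math.inf
    for _ in range(n_trials):
        params = {name: rng.choice(values) for name, values in space.items()}
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# 200 trials instead of 60,000: a fraction of the grid, at the cost of
# no guarantee that the best combination is found.
best_params, best_score = random_search(search_space, toy_backtest_score, n_trials=200)
```

Five modest parameters already give 60,000 combinations, and each extra parameter multiplies that number again, which is why a sampled budget of trials is the only practical option when each simulation is expensive.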

To reduce the infrastructure requirements and costs to a more reasonable level, I scaled back and focused on higher timeframes running from one hour to one day. It's easier to find longer-lasting effects in the data at these intervals, with the downside of having fewer potential trades. However, it's much easier for one person to manage as the strategies are fairly simple to understand - high-level buy and sell signals are generated at hourly intervals, while the responsibility for entering and exiting positions is passed to a separate service which handles execution strategies at higher frequencies. The Bottish software can handle trading events milliseconds apart, which is obviously pretty crappy by institutional standards, but I have found that even acting on events every 5 seconds is good enough for most tasks at this level of sophistication. Of course I would love to delve into higher frequency trading at some point, but the difficulty level really starts to increase: there is a lot of competition from smart people, you have to read academic papers and books and have a decent understanding of the math involved, and you also have to be willing to commit a great deal of time to research. For now I am happy to clean up and refine what I already have in place. I realised that detecting anomalies in data is only the starting point - a lot of time still has to be spent on manually manipulating the data in Python and R as part of a separate process to understand why some effects exist, and then working back from there to implement a strategy. The big learning point for me is that not everything can be automated.
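The split between slow signals and faster execution can be sketched roughly as follows. This is not the actual Bottish code: the class, its method names, the slicing logic, and the assumption that every order fills immediately are all simplifications invented for the example.

```python
from dataclasses import dataclass


@dataclass
class ExecutionService:
    """Hypothetical executor: moves the position toward a target in small slices."""

    max_slice: float        # largest order size allowed per tick
    position: float = 0.0   # current position
    target: float = 0.0     # position requested by the latest signal

    def on_signal(self, target_position: float) -> None:
        # Called rarely (e.g. hourly) by the signal-generating service.
        self.target = target_position

    def on_tick(self) -> float:
        # Called often (e.g. every 5 seconds); returns the order size sent.
        remaining = self.target - self.position
        order = max(-self.max_slice, min(self.max_slice, remaining))
        self.position += order  # assumes an immediate, complete fill
        return order


executor = ExecutionService(max_slice=0.25)
executor.on_signal(1.0)  # hourly "buy" signal: target a long position of 1 unit
orders = [executor.on_tick() for _ in range(6)]
print(orders)  # [0.25, 0.25, 0.25, 0.25, 0.0, 0.0]
```

The signal side only ever states where the position should end up, so the execution logic can be changed or replaced without touching the strategy that produces the signals.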

When I review the project at this stage, what remains is a leaner and faster system. I have learned a great deal over the development process: I understand the markets much better, I have picked up machine learning (which I knew nothing about when I started), my development skills have greatly improved, and I have an appreciation for the challenges of designing distributed systems. I discovered that in the markets, if something can be predicted with high accuracy, that effect usually cannot be traded for profit, but it may give a hint on what to research in more depth. As I went along, I discovered communities in Slack and Discord groups with like-minded individuals who could offer sage advice on what to focus on, although most things you have to discover yourself. I learned about pairs trading, arbitrage, carry, and other methods that worked and are well known (in the finance industry at least), and I thoroughly enjoyed all of this.

If you have made it this far, perhaps you are thinking of developing your own trading software? If you truly have a passion for this, then go for it! It can be a fun hobby to have on the side. You will learn a lot, from the smallest details to the big picture. And indeed, once all the systems are in place it can be relatively simple. One thing that I would suggest is to build upon some of the trading software out there now, which did not exist when I started :( QuantConnect's LEAN, for example, is really feature-complete these days. That allows you to focus on the infrastructure - some would say that's the difficult part. Just don't expect to profit immediately - it's going to take time.

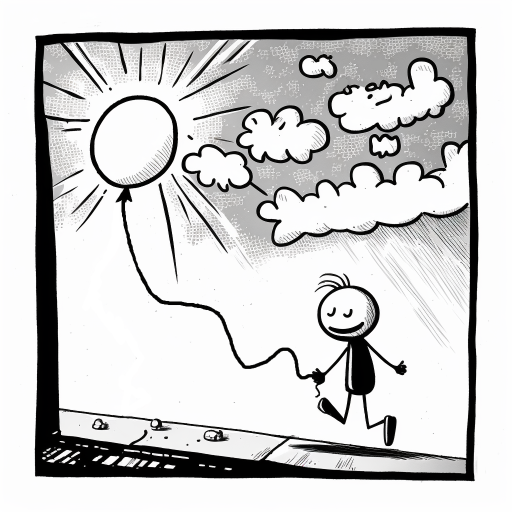

Peace at last.

I will put up a few posts detailing the technical changes to keep this site an accurate reflection of the progress made, for myself more than anything. In the near future, I also plan to open source a couple of the features on GitHub. There are two modules that others may find useful in their projects. The first is the custom ZMQ-based message broker that handles communication between the various microservices. It's a neat little thing that abstracts away the complexity of distributed tasks; it is resilient to disconnects and dropped messages through the use of state machines. I would also like to release part of the Qt/pyqtgraph-based charting component, which was helpful in debugging what was going on under the hood. A little bit of code cleaning is in order before they are ready for public release.